Signals

An exploration of the past, present and future

The Riff Project's technology is centered around a novel approach called "The Defederation Model", enabling decentralized, censorship-resistant, collaborative infrastructure that serves websites and media at scale. We use OrbitDB and IPFS to create a more resilient and cheaper web infrastructure that anyone can join, while promoting open-source contributions and cooperation. Defederation builds a collaborative network with gossip where websites can operate independently and interconnect, ensuring data persists even if individual sites go down.

These technologies enable everything from Libraries (think Netflix, Spotify, Archive.org) to direct company-to-company collaboration (working on things together) and more, ultimately building towards a generalised system that helps anyone work better with anyone else on anything that can be represented as files on a computer (which covers more than you think!).

Our technologies are permissive - anyone can use them - open - completely available for study and adaptation by all - and neutral - no token, no catch, just unstoppable Libraries and more, built on a foundation of free and open source technology but useful across everything from personal websites to enterprise.

Lenses can join with other lenses to build bigger things. We'll explore all that here.

Foreword

Today we're going to introduce a radically simple set of ideas, flipping the usual way we think about building things on the Internet.

We'll talk about ways to enable use cases that are a natural fit for technology we have already built and proven to work. A streaming portal for media, built on technologies that in their totality provide a solid foundation for Immortal Libraries. And what else is possible.

To do this, we created something we now call The Defederation Model.

We'll get into what Defederation is in a second.

We'll talk about the other use cases that could quickly be added to this foundation, and how every single use case makes it more compelling and more likely to be generally useful to everyone. We'll also cover our model for creating a positive feedback loop of people funding use cases that suit them, including models for organisations and enterprises to contribute to the effort in a way that provides immediate, tangible benefits to them.

Ultimately, this is a system designed to solve problems, get better and better, reward early, mid and late adopters, and to do so in a way that everyone can understand and participate in.

Simple goals

We want to make a system that uses novel ideas and technology to make it easier and easier for people, companies, governments, and other organisations to collaborate.

Building from simple first concepts, we will show a system that logically and mathematically builds towards a self-sustaining ecosystem that anyone can take part in, contribute to and benefit from, that is flexible and easy to understand when put in the right terms, and that is compelling enough to draw more and more value into it, creating a positive feedback loop.

Unlike past systems with similar ambitions, this one is explicitly designed to be maximally fair and neutral, with a focus on actually solving problems, making it easier for people to work together, and making it easier for people to make money by working together.

There is no authority, no central point of failure, no centre to the project - not even Riff.CC, The Riff Project or Riff Labs is ultimately necessary to make this system work once we have built an ecosystem that is self-sustaining.

How does this let you build a different kind of website - a lens - and from that a different kind of internet - and even maybe something more?

The implications get wild.

The Defederation Model

The core of this entire concept is a cooperative protocol called the Defederation Protocol. Much in the way "copyleft" uses copyright to further freedom instead of limiting it, Defederation exploits the concept of federation in an unintuitive inverse trade-off, to further freedom instead of limiting it.

This is achieved with a combination of a cooperative protocol and a new kind of network structure.

It might be most useful, however, to start by thinking about what a normal internet website might look like.

The Library shall not fall again.

Every library in history has fallen or, at some point, will fall.

However, digital libraries should in theory be impervious - as something that can be copied endlessly, why should we ever accept the total loss of one?

While that is a noble and reasonable thought, it does not reflect reality.

Data is hard to move, metadata is even harder, and databases and user data are worse still. Moving a website from one side of the United States to the other, even when it stays with the same owner, should take a matter of minutes, but in practice it is a monumental task for a company of any significant size. And maybe even especially for small ones.

So when a website is threatened, what happens? Could you build the immortal Library - with the capital L?

How?

A Normal Website

Let's explore a simple website - ftwc.xyz. In reality, ftwc.xyz is our test lens, used for helping prove the Defederation Model and Protocol, but in this example, we'll pretend it's a normal website.

ftwc.xyz - "for those who create" - is a website about creativity.

We'll say they have 1 million users.

It has fifty thousand videos on it. With an average size of about 4GiB each, this means it has to keep track of about 200TiB of data. This is a significant expense for a normal website - and it's only going to get bigger.
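As a quick sanity check on that figure, here is the arithmetic as a minimal sketch. The two inputs come straight from the paragraph above; the variable names are ours, and no replication or overhead is included.

```python
# Figures from the text: fifty thousand videos at roughly 4 GiB each.
VIDEO_COUNT = 50_000
AVG_VIDEO_GIB = 4
GIB_PER_TIB = 1024

total_gib = VIDEO_COUNT * AVG_VIDEO_GIB   # raw size in GiB, one copy only
total_tib = total_gib / GIB_PER_TIB       # the same size expressed in TiB

print(f"{total_gib:,} GiB is about {total_tib:.1f} TiB")
```

That is a little under 200TiB for a single copy, before any redundancy is added on top.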

Any seriously large video website will have even bigger problems than this, but you can already tell this is going to be a bag of hurt in terms of cost.

If they need to keep useful copies of the website active, they'll need block storage - not object storage. Object storage is a good solution in a lot of cases, but for ftwc.xyz, it's a non-starter. They have to encode their videos sometimes, and that means S3 becomes a pain, not a blessing. This is a fancy way of just explaining they need the expensive stuff.

Furthering that, they have to cope with sustained bursts of heavy traffic from legitimate users. So they need to keep a lot of servers online.

Thirty 1U servers, spread across 42U racks, could be a pretty normal setup for a website of this size.

On top of that, they might even resort to distributed storage, such as Ceph, increasing cost and complexity further.

At least three loadbalancers are required to reach a quorum that allows them to keep everything online even if a machine fails. They have to get enterprise-grade everything, and invest in extremely expensive network cards. Extremely expensive SSDs.

Not only that, they need to keep enough copies of all of these videos to keep them at useful, stable speeds even with lots of people trying to watch them at once.

They need a CDN like Cloudflare to keep them up and online through high load and DDoS attacks.

And they need to keep track of all of this metadata, and the databases that make it all work.

So they need database servers - and they'll need three for MySQL or PostgreSQL and just about any database with consistency and high availability.

They'll need webservers, and of course, three - for high availability.

They'll need to have, at minimum, triple redundant networking gear, absolutely ridiculous levels of redundancy at every level - remember, with a million users, the stakes are high.
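The recurring "three of everything" is not arbitrary. Assuming the clustered services use a simple majority quorum (our assumption for illustration, since the exact clustering software is not named here), three is the smallest node count that survives a single failure. A minimal sketch, with hypothetical helper names:

```python
def majority(node_count: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return node_count // 2 + 1


def tolerated_failures(node_count: int) -> int:
    """How many nodes can fail while a majority can still be formed."""
    return node_count - majority(node_count)


for nodes in (1, 2, 3, 5):
    print(f"{nodes} node(s): quorum of {majority(nodes)}, "
          f"survives {tolerated_failures(nodes)} failure(s)")
```

Two nodes tolerate no more failures than one, which is why the count jumps straight to three.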

Heck, they even need Ceph storage, because their use case is very specific. So it gets even harder, because not only is Ceph complicated - it's practically a fulltime job to keep it running.

So they invest, and invest, and invest and tune, and one day, at the busiest peak of the season, their website goes down.

The database server just failed, and they have no redundancy. Not only that, they might not even have backups.

In short...

And that wasn't even the point we actually need to talk about - the cost!

Triple redundancy at every level, and you're looking at a quarter of a million dollars a year. Just to keep the lights on.

That may not even include staffing, and other costs. Administration overhead. Security training and services. Firewalls. Enterprise licenses for everything. VMware or OpenStack.

So great, they either go out of business, or hopefully they had backups - which, thank goodness, they did.

But now they have to rebuild.

And so this website, which as a reminder consists of:

- 50,000 videos

- 30 webservers

- 3 loadbalancers

- 3 database servers

- 3 racks of servers

- 10 Ceph servers (to run the storage - at an approximate cost of $100,000 a year)

- 200TiB of data plus replication (~500TiB total, costing $100,000 a year alone)

So redundant, so powerful, designed never to fail and now...

Has to be rebuilt.

So The Normal Website Rebuilds

They restore everything, but when they bring the site back up, they have another problem. Nothing will load. They look into it and quickly realize the problem - someone moved the files. Everything is now on a different server, and they don't even know which one - or why - and now they're down until they figure out where it is.

At this point they start crawling their entire infrastructure to try and find out what happened. Eventually they find the files and restore them, but by this point it's an international incident. Governments actually had private contracts with this website to host training videos and other important internal video material for their employees. Now they're down, and angry.

What went wrong?

Well, it's mostly this:

https://myfavouritewidgets.corporate/files/videos/wp-20240920-final-final6.mp4

Specifically, it's this bit - myfavouritewidgets.corporate - and this bit - /files/videos/ - and this bit - https - and this bit - wp-20240920-final-final6.mp4 - because, are you getting it yet 😱 any of these changing in any way at any time will break the entire website.

If it's a file that matters, boom, you have an outage and pissed off users. 🙀
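To make those four fragile pieces explicit, here is a small sketch that splits the example URL using Python's standard library. The `fragile_parts` name is ours; the point is only that a location-based address is several independent things that all have to stay the same.

```python
from urllib.parse import urlsplit

url = "https://myfavouritewidgets.corporate/files/videos/wp-20240920-final-final6.mp4"

parts = urlsplit(url)
# Split the path into its directory and the file name at the last slash.
directory, _, filename = parts.path.rpartition("/")

fragile_parts = {
    "scheme": parts.scheme,        # the protocol used to fetch the file
    "host": parts.netloc,          # the server (or domain) it lives on
    "directory": directory + "/",  # where on that server it was put
    "filename": filename,          # what someone happened to call it
}

for name, value in fragile_parts.items():
    print(f"{name:>9}: {value}")
```

Change any one of those values and every link pointing at the old address stops working, even though the file itself is unchanged.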

So what can we do differently here?

Enter Challenger 1: BitTorrent

BitTorrent provides a partial improvement to this. By allowing people to directly participate in hosting and distributing the files for the website, it lets the site strike off some cost.

Instantly, nearly half of the cost is gone.

That's huge! But you still have a major problem.

If the main website with the BitTorrent tracker goes down, here's what happens:

- The website goes down

- The BitTorrent tracker goes down

- The files might technically still be out there, but someone has to go find them on their hard drive and manually re-seed and re-upload them to a new tracker.

- If the servers go down, everything stops.

It's closer to a better library, and is an improvement, but ultimately still very vulnerable.

Enter Challenger 2: Cloud Storage

Cloud storage claims to be a solution to all problems, but as some like to say - "it's just someone else's computer".

It has all of the same challenges as the traditional website, but with an added cost and complexity. It may even have lock-in exit fees.

Enter Challenger 3: IPFS

The InterPlanetary File System provides a fascinating partial improvement.

You can use IPFS to distribute data amongst your community, then as long as anyone who has the file behind a CID (essentially just a representation of a file, a hash*) is online and reasonably reachable for you, you can access the file.

Moreover, you can run an IPFS Gateway, and now users can access any file on IPFS (or just files you've pinned to your gateway, whichever is preferred) through standard HTTPS.

This is great! But you still need to run 3 silly servers! And 3 loadbalancers! And 3 routers! And you need to keep track of all the CIDs!

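(* Technically, a CID is not just a hash: it is a self-describing identifier that wraps the hash of a block, often the root of a Merkle DAG, together with version and codec information. That's not important for this explanation, and in practice you can just think of it as a hash. It's fine.)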

It means that technically even if your site was to be destroyed, your users could still access the files - at least for a time - but only if they had saved all the CIDs for all the files, and only if the users who had the files realised keeping their IPFS nodes running would help and was a good idea.

In practice, even this wouldn't help much.

And on top of that, keeping regular dumps of all the website's files, code and data would become onerous, and may even risk exposing PII if the regex isn't perfect. And now you're relying on others to be keeping recent backups of the site.
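The "think of it as a hash" idea behind CIDs can be sketched in a few lines. This is a toy, not IPFS: it uses a plain SHA-256 hex digest as a stand-in for a CID, an in-memory dictionary as a stand-in for the network, and the function names are hypothetical.

```python
import hashlib

# Stand-in for "the network": address -> bytes. Any peer could hold a copy.
store = {}


def toy_address(data: bytes) -> str:
    """SHA-256 hex digest used as a stand-in for a CID (not a real CID)."""
    return hashlib.sha256(data).hexdigest()


def put(data: bytes) -> str:
    """Store the bytes under the address derived from their content."""
    address = toy_address(data)
    store[address] = data
    return address


def get(address: str) -> bytes:
    """Fetch bytes by address and verify they match what was asked for."""
    data = store[address]
    if toy_address(data) != address:
        raise ValueError("content does not match its address")
    return data


address = put(b"a video file, pretend it is 4GiB")
print(address)
print(get(address) == b"a video file, pretend it is 4GiB")
```

The address depends only on the bytes, not on a server, folder or file name, so it keeps working no matter who serves the data. It also shows the catch described above: if nobody saved the address, or nobody still holds the bytes, there is nothing to look up.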

Can we build further from here?

Enter Unusual Suspect 1: The Traditional CDN

Bunny.net (unaffiliated with Riff, we're just fans 😊) provides a very good overlay over IPFS, and allows you to use a CDN to access your files.

This means any slowdown caused by IPFS doing retrievals over Bitswap can be papered over by the CDN, and you can continue to use your existing website and infrastructure.

Since CIDs are fixed once created (unless upgrading to a new hashing algorithm or CID version), you can just set the caching time to a year. Now people must be more careful, increasing your team's base stress level.

But you've now got a system that is orders of magnitude cheaper, orders of magnitude easier to manage, and orders of magnitude more resilient.

So now we've made our site fast in any region, which is a huge improvement, but we've also made it so that we can survive almost any black swan event.
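The year-long caching mentioned above can be expressed as a standard HTTP `Cache-Control` header. The sketch below is an assumption about how one might choose headers, not Bunny.net's actual configuration; the function name and the two-way split are ours.

```python
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000 seconds


def cache_headers(is_content_addressed: bool) -> dict[str, str]:
    """Pick a Cache-Control header for a response.

    Content fetched by CID never changes, so it can be cached for a long time.
    Anything addressed by a mutable name should be revalidated instead.
    """
    if is_content_addressed:
        return {"Cache-Control": f"public, max-age={ONE_YEAR_SECONDS}, immutable"}
    return {"Cache-Control": "no-cache"}


print(cache_headers(True))
print(cache_headers(False))
```

Because a cached response will be reused for a year, a mistake in what gets published under a long-lived address is also reused for a year.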

Enter Unusual Suspect 2: OrbitDB

OrbitDB is a database protocol built on top of IPFS.

It allows you to talk in a common language to other peers - browsers running IPFS and OrbitDB - allowing you to host your entire website's database - the database itself and everything about the site - on a decentralised group of volunteers. Essentially, by using the site, you donate a little bit of your computer's bandwidth to act as a sort of distributed storage layer.

At this point, we now have a system that is orders of magnitude cheaper, orders of magnitude easier to manage, and orders of magnitude more resilient. Adding OrbitDB effectively means that we don't technically need anything to be hosted by us, except for these remaining components:

- "Meet-me" server (WebRTC Relay) to allow for direct connections between users

- A CDN to act as a cache layer for the website

- Three IPFS gateways to allow dialing content

Total cost to run a site at this point is not only less than $1000 a year, but could plausibly be less than $150 a year.
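To put "orders of magnitude" into numbers, here is a rough comparison using only the figures quoted in this document: a quarter of a million dollars a year for the traditional setup, against the $1000 and $150 estimates above. These are the document's own estimates, not measured costs.

```python
import math

TRADITIONAL_PER_YEAR = 250_000  # "a quarter of a million dollars a year"
LENS_ESTIMATES = {
    "upper estimate": 1_000,    # "less than $1000 a year"
    "lower estimate": 150,      # "plausibly ... less than $150 a year"
}

ratios = {}
for label, cost in LENS_ESTIMATES.items():
    ratio = TRADITIONAL_PER_YEAR / cost
    ratios[label] = ratio
    orders = math.floor(math.log10(ratio))
    print(f"{label}: about {ratio:,.0f}x cheaper ({orders} orders of magnitude)")
```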

Now you've got something that's cheaper to run, easier to manage, and more resilient than anything else on the market that isn't also doing the same tricks.

But there are two final flourishes, because ultimately we haven't solved the biggest issue with the website.

Us.

The Cluster

Even if we went to this point, we still don't even have a library that we can sustain yet.

Simply put, we can use IPFS Cluster - this not only lets us maintain a list of "pins" of content to keep available and online via IPFS on our own servers and nodes, but also lets anyone else who wants to join and help distribute files.

Launching an IPFS Cluster is easy, and essentially allows your users to help act as the true hosts of your website.

Plus, Orbiter will soon be upgraded to distribute pinning of the OrbitDB database and data itself, allowing for total resilience.

This is close. We're not there yet, but we're close.

The Lens

Ultimately, we've built a very resilient library at this point, but it will NOT, no matter how much we improve it, outlive us - without serious manual intervention, one day this library will fall like any other.

This is where Defederation comes in. Instead of a website, we decided we now have a Lens.

A Lens is simply a way to make your own website or platform (or app!) by being able to split, merge and fork the work of others.

The main thing we wanted to resolve is fairly simple:

- It's very likely Creative Commons would love a platform like Riff.CC (we've had some conversations and they loved it years ago before we were cool!)

- We want to help contribute massively to the Creative Commons ecosystem.

- But they don't need another website that someone else runs.

- Something that someone else can upload things to

- And get them in trouble if they do something wrong

How can we do what Riff.CC was originally intended for, that Unstoppable Library concept, but without the need to rely on us?

Simple - we make us irrelevant and yet helpful. Let me explain.

With Defederation, now we can make Riff.CC a Lens for ourselves. It will contain nothing but our site running on Orbiter, with all metadata stored in OrbitDB using Constellation, and all the files in IPFS.

What's special is what happens for Creative Commons now. They can simply load our Lens, go to the settings and grab our site ID (a Defederation key), log into their own Lens as an administrator, and then "follow" or "trust" our Lens.

This is real technology, simple to implement, and it works.

Here's what happens next, as shown by what we consider our first "true" Defederation implementation:

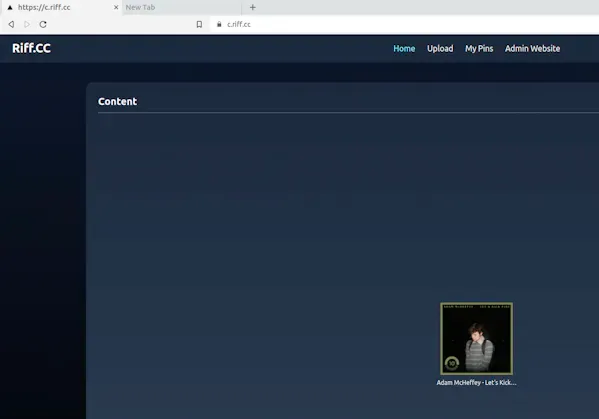

From "c.riff.cc"'s perspective - just a normal Lens, running Orbiter, and you can't really tell it's in IPFS and OrbitDB at all...

Normal so far, right?

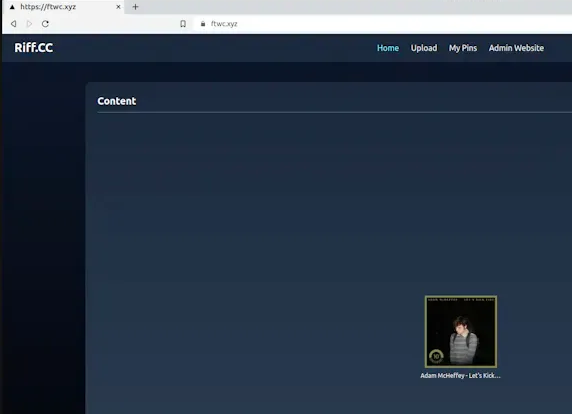

Here's an entirely different Lens, running on a different server, on a different continent.

And it doesn't have any of the files or content at all stored on it.

We:

- Uploaded an album - Adam McHeffey's "Let's Kick Fire" - to our Lens, Riff.CC

- Uploaded a different pin on FTWC.xyz (our demo lens)

- On FTWC.xyz, we then "follow" Riff.CC

- And suddenly...

Both lenses have Riff.CC's content, but technically it only "exists" on Riff.CC's Lens.

Both sites remain sovereign and completely separate and independent, but you already can't really see any difference between them.

In fact, behind the scenes, either site can change and edit the content on their site - if it differs from the lens it comes from (for example, if FTWC deletes a pin that Riff.CC has uploaded, or vice versa) then only the lens making the change is affected - preserving the original.

We then did the same thing in reverse, uploading a pin to FTWC.xyz, and then having Riff.CC follow it.

Simply put, we're now a Lens, just our view of the world - but we can change what we see.

If we want to, we can upload new stuff to our Lens and it will appear in both places!

If we don't do a great job, now FTWC can remove items or even choose to defederate from us by unfollowing.

Now we have a system where we're completely separate from each other, and yet we act as one. FTWC and Riff.CC could be literally and completely different, run by different people, from different continents, and yet this would operate as one.

Moreover, now if you got rid of either Lens, everything would be preserved by the other!
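The follow, edit and unfollow behaviour described above can be modelled in a few lines. This is a toy sketch under our own assumptions, not Orbiter's or the Defederation Protocol's actual implementation: the `Lens` class, its fields and its methods are all hypothetical, and it follows only one hop (a lens sees the direct uploads of lenses it follows).

```python
from dataclasses import dataclass, field


@dataclass
class Lens:
    """Toy model of a Lens: its own pins, who it follows, and local removals."""
    name: str
    own_pins: set = field(default_factory=set)     # content uploaded to this lens
    following: dict = field(default_factory=dict)  # followed lenses, keyed by name
    hidden: set = field(default_factory=set)       # removed from this lens's view only

    def upload(self, item: str) -> None:
        self.own_pins.add(item)

    def follow(self, other: "Lens") -> None:
        self.following[other.name] = other

    def unfollow(self, other: "Lens") -> None:
        # "Defederating" is just dropping the follow.
        self.following.pop(other.name, None)

    def remove_locally(self, item: str) -> None:
        # Only this lens's view changes; the source lens is untouched.
        self.hidden.add(item)

    def view(self) -> set:
        items = set(self.own_pins)
        for lens in self.following.values():
            items |= lens.own_pins
        return items - self.hidden


riff = Lens("Riff.CC")
ftwc = Lens("FTWC.xyz")
riff.upload("Let's Kick Fire")
ftwc.upload("FTWC demo pin")

ftwc.follow(riff)
view_after_follow = ftwc.view()          # FTWC now shows both items

ftwc.remove_locally("Let's Kick Fire")
view_after_removal = ftwc.view()         # FTWC's view changes...
riff_view = riff.view()                  # ...but Riff.CC's does not

ftwc.unfollow(riff)
view_after_unfollow = ftwc.view()        # back to FTWC's own content

print(sorted(view_after_follow))
print(sorted(view_after_removal))
print(sorted(riff_view))
print(sorted(view_after_unfollow))
```

This sketch only models what each Lens shows. It does not model where the underlying files and metadata are replicated, which is what lets one Lens preserve content after another disappears.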

This is finally the Unstoppable Library - not by being uncensorable through thumbing our nose at the law or the man - because each Lens on the clearnet Internet is still ultimately just a website someone is responsible for - but by making it so easy to pick up our work that it WILL by definition outlive us as long as even one person wants to keep something going from it.

It is the mark of the last library to fall, and the first Library.

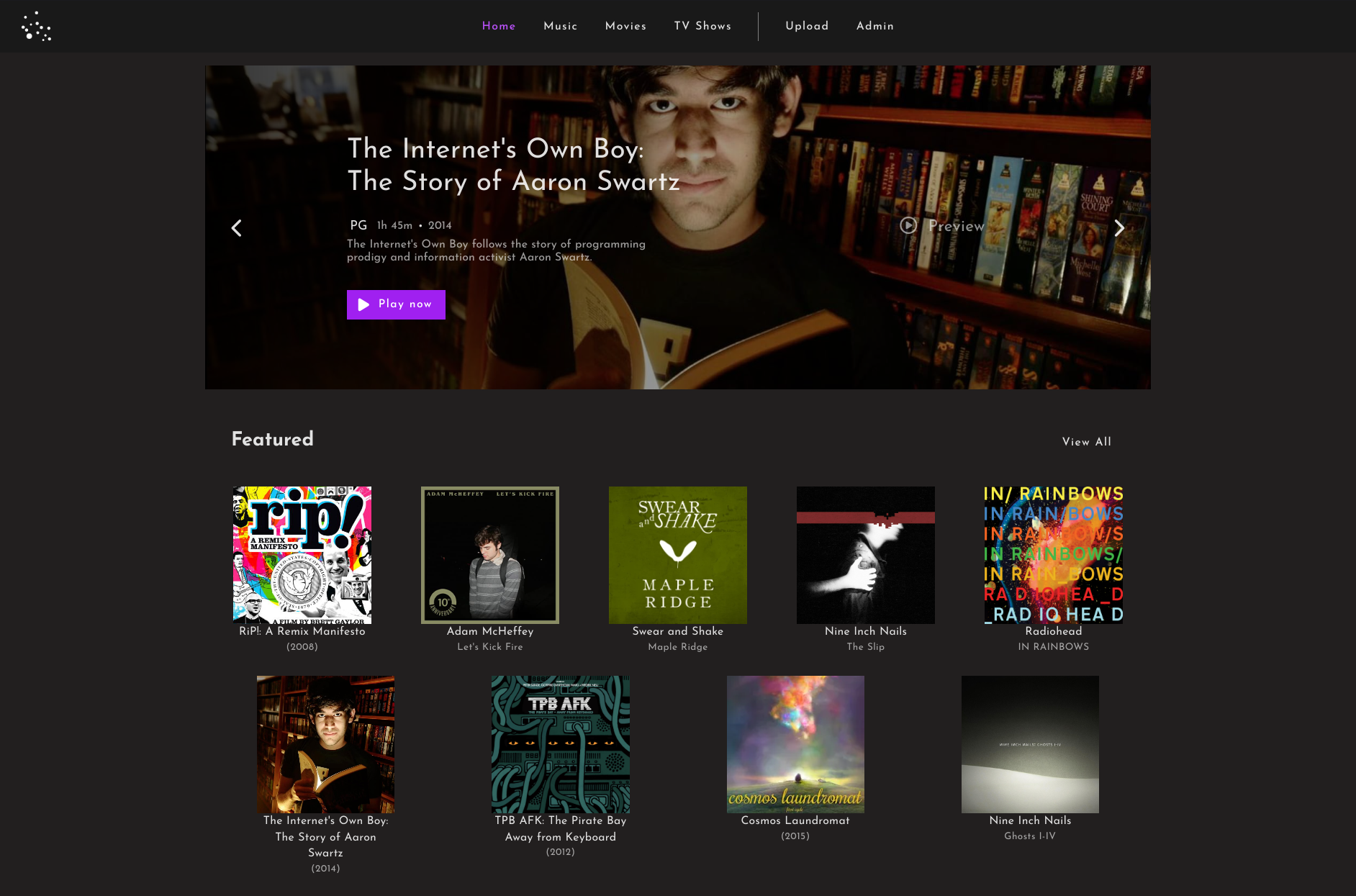

Riff.CC, The First Lens and the First Library

Riff.CC is the first Lens, and will be a purely Creative Commons and Public Domain Lens, holding the first and only unstoppable Library of copyleft and public domain works.

It performs faster than Netflix in some tests (buffering and loading speeds are perceptible improvements over Netflix under ideal conditions, and just about comparable in real world conditions).

It is fully open source, and as long as you follow the AGPL, you can use it as the base of your own project.

It is also fully interoperable with other Lenses, meaning you can follow another Lens and see their content in your feed, and you can merge with or split from them if you so desire.

Heck, you can actually use it today, while we're working on it! It's at https://orbiter.riff.cc - every single time we push a new change, and it passes tests, it makes it there.

We're putting some final polishes on it:

- Making sure every piece of content is playable, pulling together years of development

- Fixing a few quirks and fleshing out the user experience

- Bringing back true Defederation support

Once it's back, it'll look and feel EXACTLY as it does now - but what you won't know is that behind the scenes, we're just a Lens, with fully half of the content on the site existing on a completely different Lens - FTWC.xyz, with which we share no common infrastructure at all.

Our network of users, running Orbiter and IPFS + IPFS Cluster Follower, will be what supports our entire site at that point.

And finally, Riff.CC will be considered publicly launched to the world at large - completing a dream that started in 2009.

Exciting, right? Well, it's just the beginning.

The Accretion Model

Think carefully - what is Defederation truly? Not a technical system - not really - Defederation is now a social protocol. And it encourages cooperation. We can build from that.

What if we could start an entire ecosystem around the original Riff.CC concept, split out the software into its own properly separated project and begin a "flywheel of development" where we could add new features and use cases to the platform at an exponential rate?

Simply put, we've made it so easy to bring ANY website into what we've built, and anything we can do with OrbitDB and IPFS is now something that can become a use case for Orbiter - an Orbiter Module.

Here's how it works:

- Riff Labs, as the main sponsor of Riff.CC so far, finds a client who wants their use case built and made available as an Orbiter Module.

- Riff Labs works with Riff.CC and external developers to build the use case for the client.

- We split it into small, individually useful components

- Then we allow them to configure components together to solve their use case as a whole module.

As people become drawn to the ecosystem, they add their interest or their own funding and components and modules, and the flywheel spins faster and faster.

Once developed further, Orbiter lets us:

- Near-instantly distribute upgraded versions of any Orbiter Module to all users

- Outperform just about any centralised solution

- Begin to accrete almost every use case into it, becoming a truly generalised solution

What can you do to help?

- Get started with The Riff Project. Join and follow our story at https://riff.cc and help us build the first ever open-source, global, decentralized, censorship-resistant, end-to-end encrypted, collaborative media platform.

- Join the conversation on Discord - https://discord.gg/kWcHMwhkEb

- Share this document.

- Tell us what you think.

- Look at our code, find bugs and make suggestions - https://github.com/riffcc

If you're a company thinking about getting an Orbiter Module developed, we'd love to hear from you - team@riff.cc

Thanks so much for reading, we can't wait to get to know you. Let's build.

~ The Riff.CC team